Some Unicode Notes

Update: I added another useful post about Unicode thanks to this tweet.

Every now and again I find myself reading up on character sets, usually when I'm doing some kind of heavy text processing.

When I first started out programming I used to tell myself, "Just use ASCII, it's not like I write programs for people who speak other languages, right?" Well, as the Web has become more multilingual, that thinking has become increasingly hard to justify.

Even if you are only writing programs for English speakers, there may be other reasons to pay attention to character encoding. UTF-8 is effectively mandatory on the Web (the HTML Standard requires documents to be encoded as UTF-8), so a basic understanding of it could be seen as foundational.

UTF-8 has some terms and notes I need to keep in mind:

- UTF-8 uses 1, 2, 3, or 4 bytes (8, 16, 24, or 32 bits) to encode a character. Because of this it's called a variable-width character encoding.

- A UTF-8 file that contains only characters in the ASCII range is identical to an ASCII file.

- The term Basic Multilingual Plane (BMP), or Plane 0, refers to the first block of 65,536 code points, which holds the commonly used characters for most of the world's modern scripts.

- The BMP consists of the code points 0000-FFFF (hexadecimal).

- A breakdown of the groups can be found here.
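To make the variable-width point concrete, here is a small sketch that counts the bytes UTF-8 needs for a few characters. It assumes an environment with the standard `TextEncoder` global (modern browsers and Node.js); `utf8ByteLength` is a helper name I made up for illustration.

```javascript
// TextEncoder always produces UTF-8.
const encoder = new TextEncoder();

// Hypothetical helper: number of bytes UTF-8 needs for a string.
function utf8ByteLength(text) {
  return encoder.encode(text).length;
}

// U+0041 (A), U+00E9 (e with acute), U+20AC (euro sign), U+1F600 (emoji)
const samples = ["A", "\u00E9", "\u20AC", "\u{1F600}"];
for (const ch of samples) {
  console.log(ch, utf8ByteLength(ch)); // 1, 2, 3 and 4 bytes respectively
}

// ASCII-only text encodes to exactly the ASCII byte values.
const asciiBytes = Array.from(encoder.encode("Hi!")); // [72, 105, 33]
console.log(asciiBytes);
```

The last line is the "identical to an ASCII file" note in action: each byte is just the ASCII code of the character.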

Aside from UTF-8 there are also UTF-16 and UTF-32.

Neither is backwards compatible with ASCII. That is, if you convert an ASCII file to UTF-16 or UTF-32, its contents at the byte level are going to change (but not the actual data).

UTF-16 is variable width like UTF-8 but uses either one or two 16-bit values (code units) for each code point. ASCII of course only uses one byte (8 bits) for each of its characters, hence the backwards incompatibility.

I should mention that a code point is the numeric value assigned to a character in a character set. Think of a character set as a huge array where the code point is an index.
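The "index into a huge array" idea maps onto two standard string functions, so a tiny sketch may help. The variable names are mine; `codePointAt` and `String.fromCodePoint` are part of the language since ES2015.

```javascript
// From character to code point (the "index").
const euroCodePoint = "\u20AC".codePointAt(0); // 8364, i.e. 0x20AC

// From code point back to character (the "array lookup").
const euroSign = String.fromCodePoint(0x20ac); // the euro sign
const letterA = String.fromCodePoint(65);      // "A"

console.log(euroCodePoint.toString(16), euroSign, letterA);
```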

UTF-32 is fixed width: each code point is encoded using 32 bits (a whole 4 bytes!). Converting an ASCII file to UTF-16 or UTF-32 is going to result in a larger file, roughly two and four times the size respectively. That goes for databases as well.
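Here is a rough sketch of the size difference. `encodedSizes` is a hypothetical helper, and it ignores any byte order mark an actual file might start with. It leans on two facts: a JavaScript string's `length` counts 16-bit units, and spreading a string iterates by code point.

```javascript
const sizeEncoder = new TextEncoder(); // produces UTF-8

// Hypothetical helper: byte size of a string in each encoding (no BOM counted).
function encodedSizes(text) {
  return {
    utf8: sizeEncoder.encode(text).length,
    utf16: text.length * 2,      // length counts 16-bit code units
    utf32: [...text].length * 4, // spreading iterates by code point
  };
}

const helloSizes = encodedSizes("hello");     // { utf8: 5, utf16: 10, utf32: 20 }
const emojiSizes = encodedSizes("\u{1F600}"); // { utf8: 4, utf16: 4, utf32: 4 }
console.log(helloSizes, emojiSizes);
```

For plain ASCII text UTF-8 is the clear winner; for a character outside the BMP all three end up at 4 bytes.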

I think what trips me up are statements like "JavaScript/ECMAScript supports UTF-8" that I saw online when I was first learning the language. It had me assuming that the escape sequences \u0000 - \uFFFF are how you use UTF-8 code points in strings.

I even wrote that here in an earlier draft.

That's not really accurate. All strings in JavaScript are sequences of 16-bit values (code units), which in practice means UTF-16; the spec does not get into the details of how an implementation stores them. When a code unit in the range 0xD800 - 0xDBFF (a high surrogate) is immediately followed by one in the range 0xDC00 - 0xDFFF (a low surrogate), the two are called a surrogate pair, and the spec describes an algorithm for calculating the resulting code point.
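The surrogate pair arithmetic is short enough to write out. This is my own sketch of the standard UTF-16 formula, not code lifted from the spec, and the function names are made up.

```javascript
// Combine a high and a low surrogate into one code point.
function decodeSurrogatePair(high, low) {
  return (high - 0xd800) * 0x400 + (low - 0xdc00) + 0x10000;
}

// The reverse direction: split a code point above 0xFFFF into a pair.
function encodeSurrogatePair(codePoint) {
  const offset = codePoint - 0x10000;
  return [0xd800 + (offset >> 10), 0xdc00 + (offset & 0x3ff)];
}

const grinning = "\u{1F600}";
const highUnit = grinning.charCodeAt(0); // 0xD83D
const lowUnit = grinning.charCodeAt(1);  // 0xDE00
const decoded = decodeSurrogatePair(highUnit, lowUnit); // 0x1F600

console.log(decoded.toString(16), decoded === grinning.codePointAt(0));
```

The built-in `codePointAt` does the same calculation for you, which is a handy way to check the formula.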

The `\uXXXX` syntax is actually for writing characters of the BMP that may not be on your keyboard. At runtime, each one is still a 16-bit value in memory.

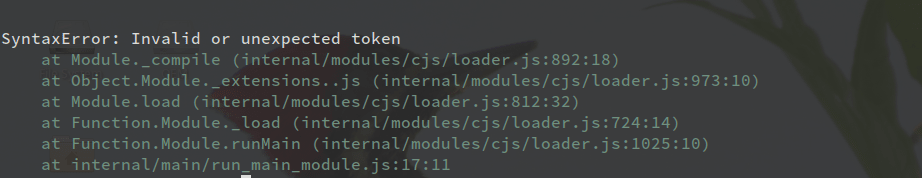
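A quick way to see the 16-bit units for yourself is to compare string lengths. The `\u{...}` form below is not mentioned above; it is the code point escape added in ES2015, and I include it only for comparison.

```javascript
const eAcute = "\u00E9";        // one BMP character, one 16-bit unit
const viaPair = "\uD83D\uDE00"; // two escapes forming one surrogate pair
const viaBraces = "\u{1F600}";  // ES2015 code point escape, same string

console.log(eAcute.length);         // 1
console.log(viaPair.length);        // 2, length counts 16-bit units
console.log(viaPair === viaBraces); // true
console.log([...viaPair].length);   // 1, iteration goes by code point
```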

Where does UTF-8 come in? It's your actual source code! The JS files that you serve or pass to node are expected to be UTF-8 encoded! This is consistent with the general expectation that everything on the Web be UTF-8 encoded.
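The boundary between "UTF-8 on disk" and "16-bit units in memory" can be sketched with the standard `TextDecoder`. The byte array here stands in for the contents of a file; I typed it by hand instead of reading a real one.

```javascript
// The four bytes a UTF-8 encoded file would contain for U+1F600.
const fileBytes = new Uint8Array([0xf0, 0x9f, 0x98, 0x80]);

// TextDecoder defaults to UTF-8 and hands back an ordinary JS string.
const inMemory = new TextDecoder().decode(fileBytes);

console.log(fileBytes.length);                    // 4 bytes on disk
console.log(inMemory.length);                     // 2 code units in memory
console.log(inMemory.codePointAt(0).toString(16)); // "1f600"
```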

There may be opportunities here for subtle bugs and even security issues but I have not taken the time to properly understand them yet. I do know that mixing up the encoding of your data in a MariaDB/MySQL server can cause your queries to give the wrong results.
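I can at least sketch the general shape of an encoding mix-up. This is not a reproduction of anything MariaDB/MySQL does; it is just a Node.js illustration (it uses the Node-only `Buffer` global) of what happens when bytes written as UTF-8 are read back as Latin-1.

```javascript
// Node.js only: Buffer is not available in browsers.
const original = "caf\u00E9";                      // "café", 4 characters
const storedBytes = Buffer.from(original, "utf8"); // 63 61 66 c3 a9
const misread = storedBytes.toString("latin1");    // each byte read as one character

console.log(misread);              // the last character has turned into two
console.log(misread.length);       // 5
console.log(misread === original); // false, so an equality lookup would miss
```

A comparison against the misread value fails even though the underlying bytes never changed, which is the kind of wrong result I mean.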

I have also heard of XSS and SQL injection bypasses by manipulating misconfigured character encoding. I'm starting to get a better understanding as to how, but again I have not dug into it yet.

Here are some useful posts on Unicode and JavaScript:

JavaScript has a Unicode problem

Comparison of Unicode encodings

What every JavaScript developer should know about Unicode

The Absolute Minimum Every Software Developer Absolutely, Positively Must Know About Unicode and Character Sets